Ph.D. in Computer Science

University of Exeter

Student: Han Wu

Supervisors: Dr. Johan Wahlström and Dr. Sareh Rowlands

Expected completion date: 24/May/2024

Research Website

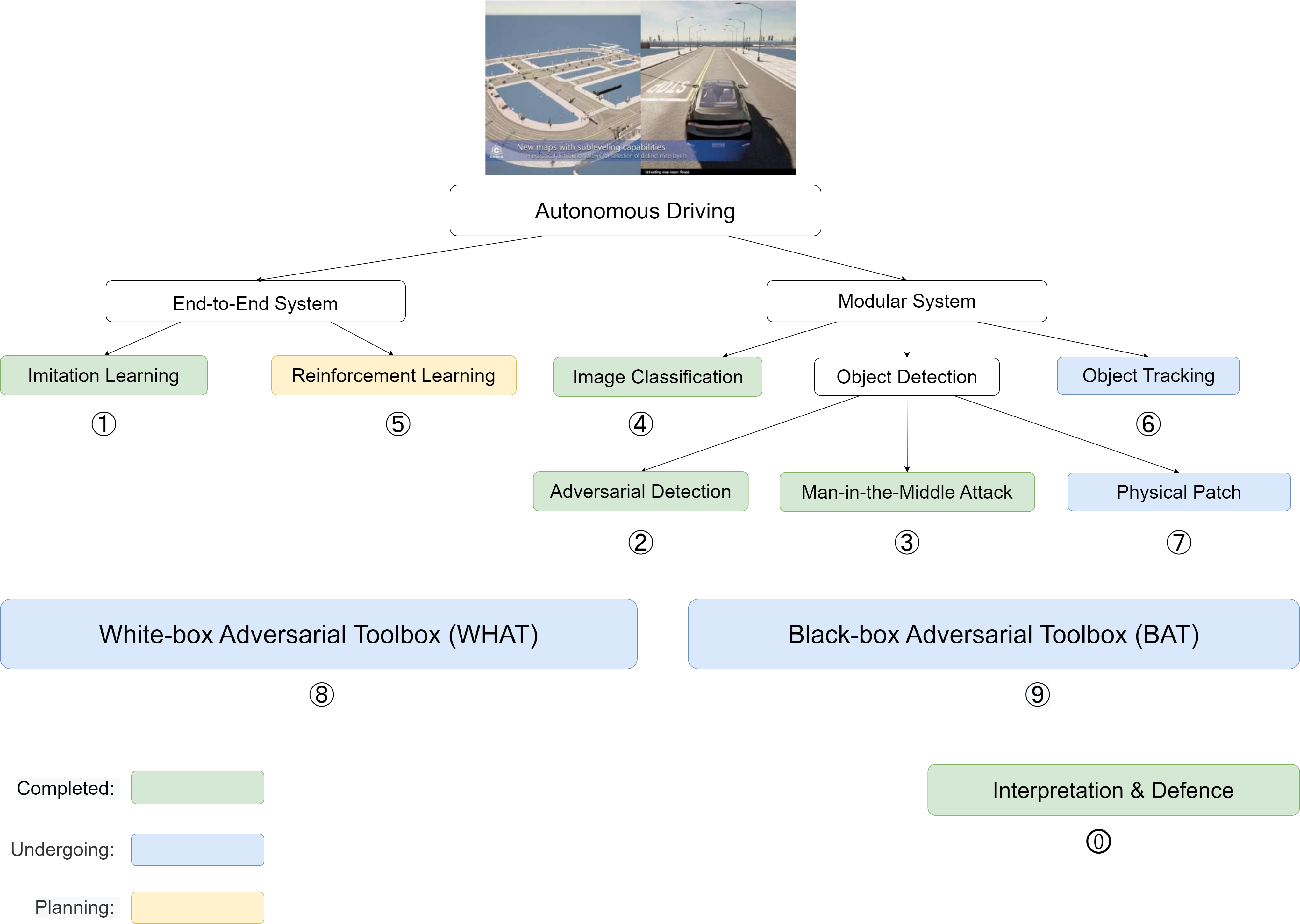

① Adversarial Driving: Attacking End-to-End Autonomous Driving System. [Paper]

② Adversarial Detection: Attacking Object Detection in Real Time. [Paper]

③ A Man-in-the-Middle Attack against Object Detection System. [Paper]

④ Distributed Black-box Attack against Image Classification. [Paper]

⑤ Coming soon.

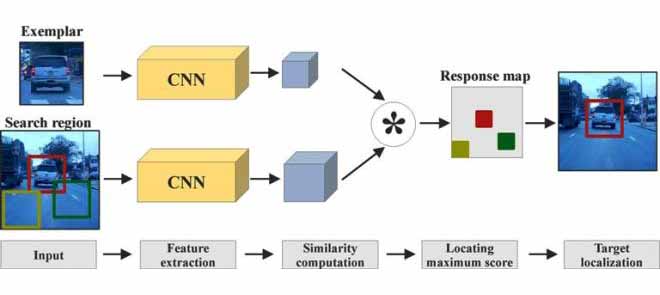

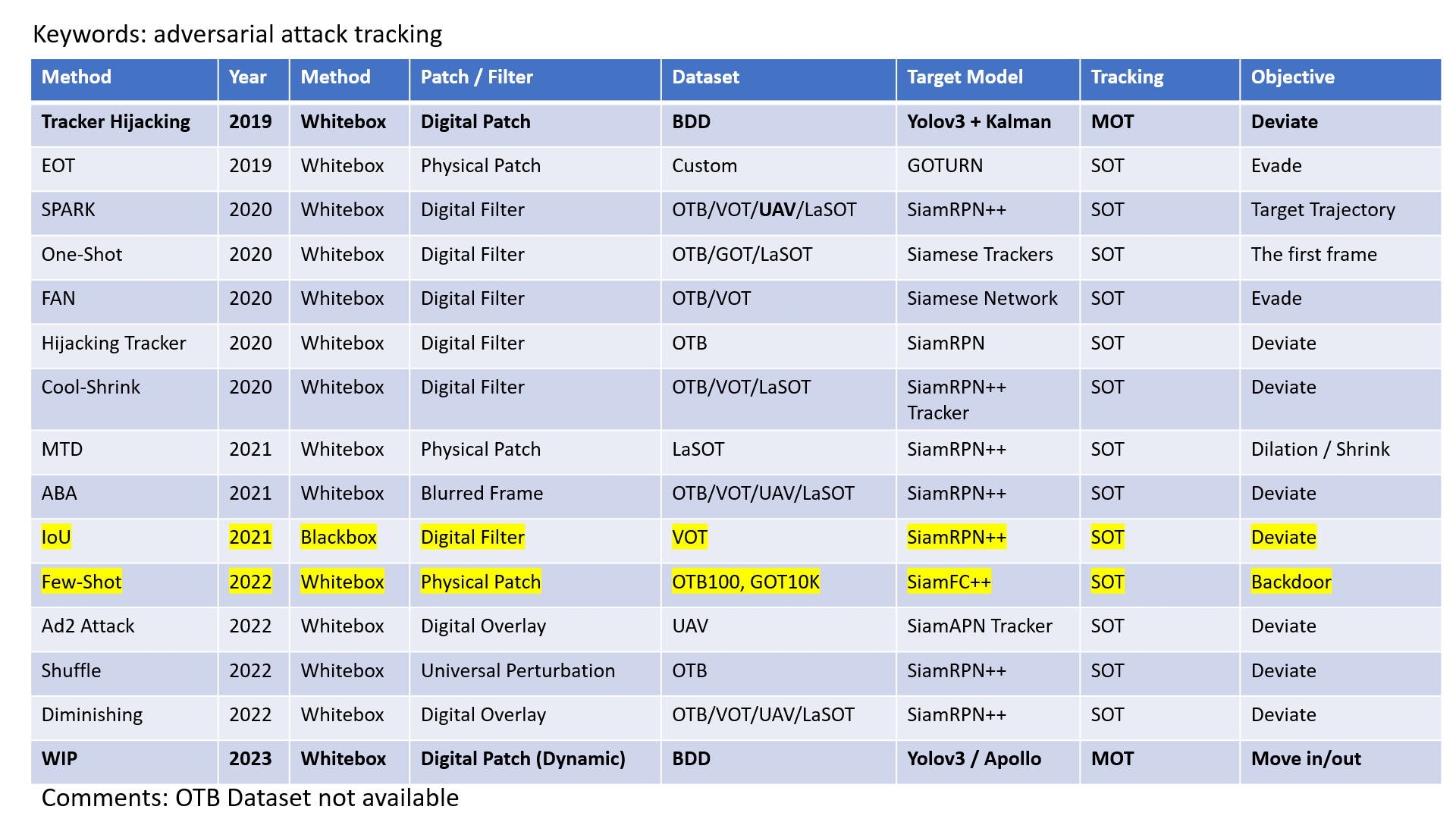

⑥ Adversarial Tracking: Real-time Adversarial Attacks against Object Tracking. [Proposal]

⑦ Adversarial Patch: Physical Patch in Carla Simulator. [Proposal]

⑧ A WHite-box Adversarial Toolbox (WHAT). [GitHub]

⑨ A Black-box Adversarial Toolbox (BAT). [GitHub]

🄋 Interpretable Machine Learning for COVID-19. [Paper]

Week 0

2022/08/15 - 2022/09/18

Three Demo Videos for ICRA 2023.

- Adversarial Driving: https://driving.wuhanstudio.uk/

- Adversarial Detection: https://detection.wuhanstudio.uk/

- Man-in-the-Middle Attack: https://minm.wuhanstudio.uk/

Three Draft Papers for ICRA 2023:

- Adversarial Driving: https://arxiv.org/abs/2103.09151

- Adversarial Detection: https://arxiv.org/abs/2209.01962

- Man-in-the-Middle Attack: https://arxiv.org/abs/2208.07174

The ICRA 2023 paper submission deadline is 15 September 2022.

One Draft Paper for MLSys 2023:

- Distributed Black-box Attack against Image Classification Cloud Services.

The MLSys 2023 paper submission deadline is 28 October 2022.

Week 1

2022/09/26 - 2022/10/02

Are black-box attacks real threats or just research stories?

MLSys 2023:

- Paper Submission: Friday, October 28, 2022 4pm ET

- Page Length: Up to 10 pages long, not including references (10 + n)

Survey

Black-box attacks rely on queries, but attacking a real-world image classification model in a cloud service can take 20,000 to 50,000 queries per image. A single attack could therefore cost $480 - $1200 and take 5-14 hours (Ilyas et al.), and it is not guaranteed to succeed (the success rate is below 100%).
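The cost estimate is simple arithmetic, sketched below. The price of $0.024 per query and the latency of about one second per query are assumptions back-derived from the quoted ranges, not values taken from any provider's price list.

```python
from typing import Tuple

# ASSUMPTIONS: both constants are back-derived from the ranges quoted above
# ($480 for 20,000 queries, 5-14 hours for 20,000-50,000 queries).
PRICE_PER_QUERY_USD = 0.024
SECONDS_PER_QUERY = 1.0


def attack_cost(num_queries: int) -> Tuple[float, float]:
    """Return (cost in USD, duration in hours) of a sequential attack."""
    cost = num_queries * PRICE_PER_QUERY_USD
    hours = num_queries * SECONDS_PER_QUERY / 3600
    return cost, hours


for n in (20_000, 50_000):
    cost, hours = attack_cost(n)
    print(f"{n} queries: ${cost:.0f}, {hours:.1f} h")
```

Under these assumptions, the duration scales linearly with the number of queries, which is why sending queries in parallel is an alternative to reducing their number.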

The following evaluation metrics are important for black-box attacks:

- Success Rate: Initial research focused on improving the success rate.

- Number of Queries: Recent research interests shifted to reducing the number of queries.

- Time Cost: Reducing the number of queries is not the only way to accelerate black-box attacks; sending queries in parallel (distributed queries) also reduces the attack time.

In a survey paper (Bhambri et al.), black-box attacks are classified into:

- Gradient-based Methods

- Local Search

- Combinatorics

- Transferability

Problems

For black-box attacks against cloud services:

- The more queries we send simultaneously, the faster the attack.

- Do we need to start from scratch every time we attack the same model?

- Experiments: we shouldn't assume access to pre-processing methods.
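A minimal sketch of the first point, assuming a hypothetical `query_api` function that stands in for one HTTP request to a cloud API (the latency is simulated with `time.sleep`, and the returned label is a dummy value):

```python
import time
from concurrent.futures import ThreadPoolExecutor


def query_api(image_id: int) -> int:
    """Hypothetical stand-in for one request to an image classification API."""
    time.sleep(0.05)       # simulated network and inference latency
    return image_id % 10   # dummy class label


def query_sequential(image_ids):
    """Send the queries one after another."""
    return [query_api(i) for i in image_ids]


def query_distributed(image_ids, num_workers=8):
    """Send the queries concurrently; pool.map preserves the input order."""
    with ThreadPoolExecutor(max_workers=num_workers) as pool:
        return list(pool.map(query_api, image_ids))


if __name__ == "__main__":
    ids = list(range(16))
    for name, fn in (("sequential", query_sequential), ("distributed", query_distributed)):
        start = time.perf_counter()
        fn(ids)
        print(f"{name}: {time.perf_counter() - start:.2f} s")
```

Threads are enough here because the workload is I/O-bound: each worker spends its time waiting for the remote response rather than computing.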

Plan

Week 1 - Week 5 (Total: 5 weeks)

Week 1:

- Local Search

  - SimBA (2019)

  - Square Attack (2020)

Week 2:

- Gradient Estimation

  - Limited Query (2018)

  - Bandits (2019)

- The Draft for MLSys 2023. (Introduction)

Week 3: The Draft for MLSys 2023. (Methodology)

Week 4: The Draft for MLSys 2023. (Experiment)

Week 5: Revision & Submission.

Week 2

2022/10/03 - 2022/10/09

Acceleration Ratio

The distributed square attack can reduce the attack time from ~15h to ~4h.

Source Code: https://github.com/wuhanstudio/adversarial-classification

Common Mistakes

While implementing distributed black-box attacks, I noticed that some prior research made several common mistakes in their code. Their methods outperformed state-of-the-art partly because these mistakes gave them access to extra information that should not be available for black-box attacks. For example:

- They apply the perturbation after image resizing, assuming they know the input image size of a black-box model.

- The bandit attack does not clip the image while estimating gradients, assuming they can send invalid images (pixel value > 255 or < 0) to the black-box model.

Besides these mistakes, some methods are less effective while attacking real-world image classification APIs:

- They assume they can perturb a pixel value from 105 to 105.45 (float), while real-world input images are 8-bit integers, so some perturbations are lost after data type conversion: int(105.45) == 105.

- Cloud APIs accept encoded images such as JPEG (lossy compression) or PNG as input, while prior research assumes they can add perturbations to the raw pixels. After JPEG encoding, some of the adversarial perturbation is lost.

As a result, some prior research compared their methods with others under unfair settings. To avoid these mistakes, I designed my own image classification cloud service for further research, so that the comparison is fair.
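The clipping and data type issues can be illustrated with a few pixel values. This is a sketch on plain Python lists, not the code of any of the attacks above; the pixel values and perturbations are made up for illustration.

```python
def to_valid_pixels(perturbed):
    """Clip to [0, 255] and truncate to integers, as an 8-bit image requires."""
    return [int(min(255.0, max(0.0, v))) for v in perturbed]


# Illustrative values only: four pixels and a float perturbation for each.
original = [105, 0, 255, 128]
perturbation = [0.45, -3.0, 4.0, 12.7]

perturbed = [p + d for p, d in zip(original, perturbation)]
valid = to_valid_pixels(perturbed)

# The perturbation that actually reaches the model after conversion.
surviving = [v - p for v, p in zip(valid, original)]
print(valid)
print(surviving)
```

Only the last pixel keeps a (truncated) perturbation: the first is lost to integer conversion, the second and third to clipping. An attack evaluated on the unconverted floats would look stronger than it is against a real API.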

Why they made these mistakes

They tested their attacks against local models on their own computers (with direct access to the file model.h5) and relied on self-restraint to limit their access to model information. Intentionally or unintentionally, they exploited extra information to improve their methods. For example:

- A PyTorch / TensorFlow model makes predictions using the function `model.predict(X)`, and the function only accepts input images `X` as arrays. We can't stack images of different shapes to be an array, thus they resize the images to be the same size so that the function won't give errors, which is a mistake.

- The function `model.predict(X)` won't give errors even if the image `X` contains negative values. Thus they are unaware that their methods generate invalid images.

- The function `model.predict(X)` accepts float numbers, thus they did not convert their input images to be integers.

- The function `model.predict(X)` accepts raw images, thus they did not encode their inputs.

Plan

Week 1:

- Local Search

[●] SimBA (2019)

[●] Square Attack (2020)

Week 2:

- Gradient Estimation

[ ] Limited Query (2018) (NES Gradient Estimation)

[●] Bandits (2019) (The Data and Time priors)

- Cloud Service APIs

[●] Cloud Vision (Google)

[●] Imagga

[●] Deep API (ours)

- The Draft for MLSys 2023. (Introduction)

Week 3

2022/10/10 - 2022/10/16

Most experiments were completed.

1. Pre-processing

Experiment Settings:

- Attacking 1000 images in the ImageNet dataset.

- $L_{\infty}$ = 0.05

- Max number of queries for each image = 1,000
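A minimal sketch of how the $L_{\infty}$ budget constrains a candidate adversarial image. It assumes that 0.05 is measured on a pixel scale of [0, 1]; the settings above do not state the scale, so this is an assumption.

```python
EPSILON = 0.05  # L_inf budget from the settings above (assumed [0, 1] pixel scale)


def project_linf(original, adversarial, eps=EPSILON):
    """Project `adversarial` onto the L_inf ball of radius `eps` around
    `original`, then keep every pixel inside the valid range [0, 1]."""
    projected = []
    for x, x_adv in zip(original, adversarial):
        delta = max(-eps, min(eps, x_adv - x))
        projected.append(max(0.0, min(1.0, x + delta)))
    return projected


def linf_distance(a, b):
    """Largest absolute per-pixel difference between two images."""
    return max(abs(x - y) for x, y in zip(a, b))


image = [0.50, 0.02, 0.99]
candidate = [0.90, -0.50, 1.50]
adv = project_linf(image, candidate)
print(adv, linf_distance(image, adv))
```

Every query sent to the model should pass through a projection of this kind, otherwise the reported success rate is not comparable across attacks.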

Model Accuracy (1000 samples):

| Environment | Inception v3 | Resnet 50 | VGG16 |

|---|---|---|---|

| Local Model | 75.90% | 70.90% | 65.20% |

| DeepAPI | 75.70% | 70.80% | 65.00% |

Before Pre-processing (Local):

I = Inception v3, R = Resnet 50, V = VGG16.

| Attack | Avg. Queries (I) | Avg. Queries (R) | Avg. Queries (V) | Success Rate (I) | Success Rate (R) | Success Rate (V) | Total Queries (I) | Total Queries (R) | Total Queries (V) |
|---|---|---|---|---|---|---|---|---|---|
| SimBA | 745.40 | 690.50 | 630.75 | 3.04% | 4.33% | 5.13% | 745,000 | 691,000 | 631,000 |
| Square | 178.40 | 88.47 | 102.10 | 91.30% | 97.46% | 95.40% | 135,000 | 62,700 | 66,400 |
| Bandits | 520.40 | 403.00 | 382.10 | 41.37% | 55.01% | 57.52% | 520,000 | 403,000 | 382,000 |

After Pre-processing (Cloud):

| Attack | Avg. Queries (I) | Avg. Queries (R) | Avg. Queries (V) | Success Rate (I) | Success Rate (R) | Success Rate (V) | Total Queries (I) | Total Queries (R) | Total Queries (V) |
|---|---|---|---|---|---|---|---|---|---|
| SimBA | 744.75 | 697.60 | 633.25 | 3.33% | 3.64% | 8.9% | 745,000 | 697,000 | 633,000 |
| Square | 290.50 | 194.15 | 223.60 | 83.01% | 92.15% | 88.30% | 222,000 | 138,700 | 145,100 |
| Bandits | 683.35 | 639.55 | 596.20 | 10.80% | 11.17% | 9.47% | 681,000 | 639,000 | 596,000 |

2. Distributed Queries

Distributed queries (8 workers):

| Cloud Service | 1 query | 2 queries | 10 queries | 20 queries |

|---|---|---|---|---|

| Cloud Vision | 446.68ms | 353.61ms | 684.25ms | 1124.82ms |

| Imagga | 1688.11ms | 2331.81ms | 10775.75ms | 21971.77ms |

| DeepAPI (ours) | 538.47ms | 643.17ms | 1777.81ms | 1686.36ms |
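To read the table as a speed-up, the measured time for n concurrent queries can be compared with an estimated sequential time of n times the single-query latency. That estimate is an assumption (sequential runs were not measured separately here); the numbers are copied from the table.

```python
# Latencies in milliseconds, copied from the table above.
latency_ms = {
    "Cloud Vision": {1: 446.68, 2: 353.61, 10: 684.25, 20: 1124.82},
    "Imagga": {1: 1688.11, 2: 2331.81, 10: 10775.75, 20: 21971.77},
    "DeepAPI": {1: 538.47, 2: 643.17, 10: 1777.81, 20: 1686.36},
}


def concurrency_gain(service: str, n: int) -> float:
    """Estimated sequential time (n x single-query latency) divided by the
    measured time for n concurrent queries."""
    times = latency_ms[service]
    return n * times[1] / times[n]


for service in latency_ms:
    print(f"{service}: {concurrency_gain(service, 20):.2f}x with 20 queries")
```

A gain close to 1 suggests the service handles concurrent requests more or less one at a time, while a gain close to n means concurrent queries come almost for free.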

3. Distributed Attacks

Experiment Settings:

- Attacking 100 images in the ImageNet dataset.

- $L_{\infty}$ = 0.05

- Max number of queries for each image = 1,000

3.1 Non-Distributed:

| Attack | Avg. Queries (I) | Avg. Queries (R) | Avg. Queries (V) | Success Rate (I) | Success Rate (R) | Success Rate (V) | Total Queries (I) | Total Queries (R) | Total Queries (V) | Time in min (I) | Time in min (R) | Time in min (V) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| SimBA | 775.10 | 739.60 | 729.70 | 2.56% | 4.00% | 2.70% | 77,500 | 74,000 | 73,000 | 662 | 638 | 657 |
| Square | 359.80 | 200.10 | 227.60 | 78.21% | 93.33% | 91.89% | 28,100 | 15,400 | 16,800 | 301 | 81 | 175 |
| Bandits | 730.30 | 688.30 | 697.70 | 7.69% | 9.33% | 6.76% | 73,000 | 68,800 | 69,800 | 758 | 629 | 670 |

3.2 Horizontal Distribution:

| Attack | Avg. Queries (I) | Avg. Queries (R) | Avg. Queries (V) | Success Rate (I) | Success Rate (R) | Success Rate (V) | Total Queries (I) | Total Queries (R) | Total Queries (V) | Time in min (I) | Time in min (R) | Time in min (V) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| SimBA | 772.50 | 738.80 | 727.70 | 2.56% | 2.67% | 2.70% | 77,300 | 73,900 | 72,800 | 148 | 133 | 226 |
| Square | 359.80 | 204.70 | 222.80 | 78.21% | 93.33% | 90.54% | 28,100 | 15,400 | 16,500 | 48 | 24 | 58 |
| Bandits | 740.50 | 672.60 | 706.80 | 5.13% | 10.67% | 5.41% | 73,700 | 67,000 | 70,400 | 221 | 202 | 292 |
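The acceleration ratio of horizontal distribution follows from the Time (min) columns of tables 3.1 and 3.2. The sketch below copies those values; the column order (I, R, V) is assumed to match the model order of the accuracy table (Inception v3, Resnet 50, VGG16).

```python
# Attack time in minutes, copied from tables 3.1 and 3.2 (columns I, R, V).
time_min = {
    "SimBA": {"sequential": (662, 638, 657), "horizontal": (148, 133, 226)},
    "Square": {"sequential": (301, 81, 175), "horizontal": (48, 24, 58)},
    "Bandits": {"sequential": (758, 629, 670), "horizontal": (221, 202, 292)},
}


def acceleration_ratios(attack: str):
    """Return sequential time / horizontally distributed time for I, R, V."""
    times = time_min[attack]
    return tuple(s / h for s, h in zip(times["sequential"], times["horizontal"]))


for attack in time_min:
    ratios = ", ".join(f"{r:.2f}x" for r in acceleration_ratios(attack))
    print(f"{attack}: {ratios}")
```

The ratios vary by attack and model, so a single headline speed-up figure hides a fair amount of spread.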

3.3 Vertical Distribution:

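| Attack | Avg. Queries (I) | Avg. Queries (R) | Avg. Queries (V) | Success Rate (I) | Success Rate (R) | Success Rate (V) | Total Queries (I) | Total Queries (R) | Total Queries (V) |
|---|---|---|---|---|---|---|---|---|---|
| SimBA | | | | | | | | | |
| Square | | | | | | | | | |
| Bandits | | | | | | | | | |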

Plan

- Week 4: Draft

- Week 5: Revision and Submission

Week 4

2022/10/17 - 2022/10/23

Completed all experiments except vertical distribution (need one more day).

The experiments used up $300 free tier provided by Microsoft Azure.

Plan

- Week 5: Revision and Submission

Week 5

2022/10/24 - 2022/10/30

One Draft Paper for MLSys 2023:

- Distributed Black-box Attack against Image Classification Cloud Service: PDF

One Demo Video for MLSys 2023.

- Adversarial Classification: https://distributed.wuhanstudio.uk/

Plan

- Week 6: Reinforcement Learning

Week 6

2022/10/31 - 2022/11/06

Reinforcement Learning

Textbook (Sutton & Barto):

Online Courses:

- https://www.coursera.org/specializations/reinforcement-learning

- https://www.udemy.com/course/beginner-master-rl-1

Week 7

2022/11/07 - 2022/11/13

1. Reinforcement Learning

Trade-off between exploration and exploitation.
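A minimal sketch of the trade-off using epsilon-greedy action selection, one common strategy (the Q-values in the usage line are made up for illustration):

```python
import random


def epsilon_greedy(q_values, epsilon, rng=random):
    """With probability epsilon pick a random action (exploration),
    otherwise pick the action with the highest estimated value (exploitation)."""
    if rng.random() < epsilon:
        return rng.randrange(len(q_values))
    return max(range(len(q_values)), key=lambda a: q_values[a])


# Illustrative action-value estimates for a state with three actions.
print(epsilon_greedy([0.1, 0.9, 0.3], epsilon=0.1))
```

With `epsilon=0` the agent is purely greedy; with `epsilon=1` it ignores its estimates entirely.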

1.1 Tabular Solution

The state and action spaces are small enough for the approximate value functions to be represented as arrays or tables.

- Finite Markov Decision Process

- Dynamic Programming

- Monte Carlo Methods

TD learning combines some of the features of both Monte Carlo and Dynamic Programming (DP) methods.

- Temporal-Difference Learning

- n-step Bootstrapping

1.2 Approximate Solution Methods

Problems with arbitrarily large state spaces.

- Prediction Task: Evaluate a given policy by estimating the value of taking actions following the policy.

- Control Task: Find the optimal policy that maximizes the expected return.

- On-policy: Estimate the value of a policy while using it for control.

- Off-policy: The policy used to generate behaviour, called the behaviour policy, may be unrelated to the policy that is evaluated and improved, called the estimation policy.

- On-policy TD Prediction

- TD(0)

- On-policy TD Control

- SARSA

- Off-policy TD Control

- Q-Learning

- Dyna-Q and Dyna-Q+

- Expected SARSA

- Policy Gradient Methods

- REINFORCE

- Actor-Critic

- Advantage Actor-Critic (A2C)
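The difference between on-policy and off-policy TD control is visible in the update target. A minimal tabular sketch, with `Q` as a list of per-state action-value lists and illustrative default hyperparameters:

```python
def sarsa_update(Q, s, a, r, s_next, a_next, alpha=0.1, gamma=0.99):
    """On-policy: bootstrap from the action a_next the policy actually takes."""
    target = r + gamma * Q[s_next][a_next]
    Q[s][a] += alpha * (target - Q[s][a])


def q_learning_update(Q, s, a, r, s_next, alpha=0.1, gamma=0.99):
    """Off-policy: bootstrap from the greedy action in s_next,
    whatever the behaviour policy does next."""
    target = r + gamma * max(Q[s_next])
    Q[s][a] += alpha * (target - Q[s][a])


# Two states, two actions; the behaviour policy picks action 0 in state 1.
Q_sarsa = [[0.0, 0.0], [1.0, 2.0]]
Q_qlearn = [[0.0, 0.0], [1.0, 2.0]]
sarsa_update(Q_sarsa, s=0, a=0, r=1.0, s_next=1, a_next=0, alpha=0.5, gamma=1.0)
q_learning_update(Q_qlearn, s=0, a=0, r=1.0, s_next=1, alpha=0.5, gamma=1.0)
print(Q_sarsa[0][0], Q_qlearn[0][0])
```

Both rules share the same TD error structure; only the choice of the next action value differs.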

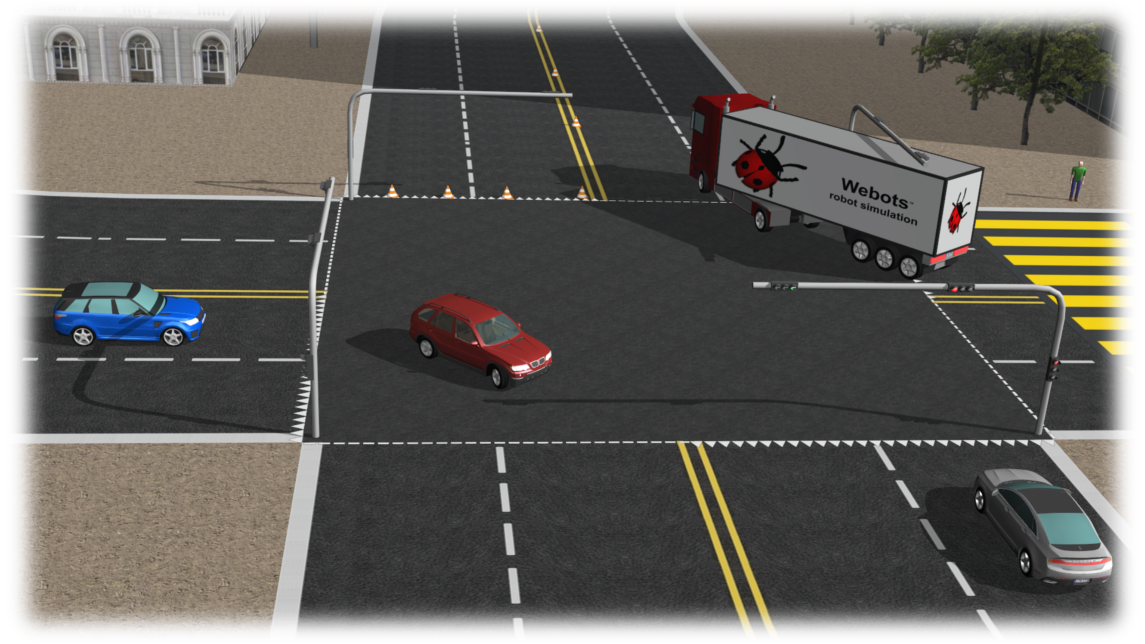

2. Webots Simulator (ROS2)

Multi-agent Reinforcement Learning.

Week 8

2022/11/14 - 2022/11/20

Multi-Agent Reinforcement Learning (MARL)

Trade-off between exploration and exploitation.

Paper:

Books:

- RL Theory: Reinforcement Learning (Sutton & Barto, 2nd Edition)

- RL Practice: Reinforcement Learning: Industrial Applications of Intelligent Agents

Environments:

Week 9

2022/11/21 - 2022/11/27

Travel

- Exeter --> London --> Hong Kong

Week 10

2022/11/28 - 2022/12/04

Travel

- Hong Kong (self-isolation)

Week 11

2022/12/05 - 2022/12/11

Travel

- Shenzhen (self-isolation)

Week 12

2022/12/12 - 2022/12/18

Travel

- Visiting Student at SUSTech

Week 13

2022/12/19 - 2022/12/25

Travel

- Visiting Student at SUSTech

Week 14

2022/12/26 - 2023/01/01

Travel

- Guangzhou --> Singapore --> London --> Exeter

Week 0

2023/01/02 - 2023/01/08

Research Plan

- Milestone 1: Reinforcement Learning: Are reinforcement-learning-based end-to-end driving models secure?

- Milestone 2: Adversarial Tracking: Real-time adversarial attacks against Object Tracking.

- Milestone 3: Adversarial Patch: Physical Patch in Carla Simulator.

Week 1

2023/01/09 - 2023/01/15

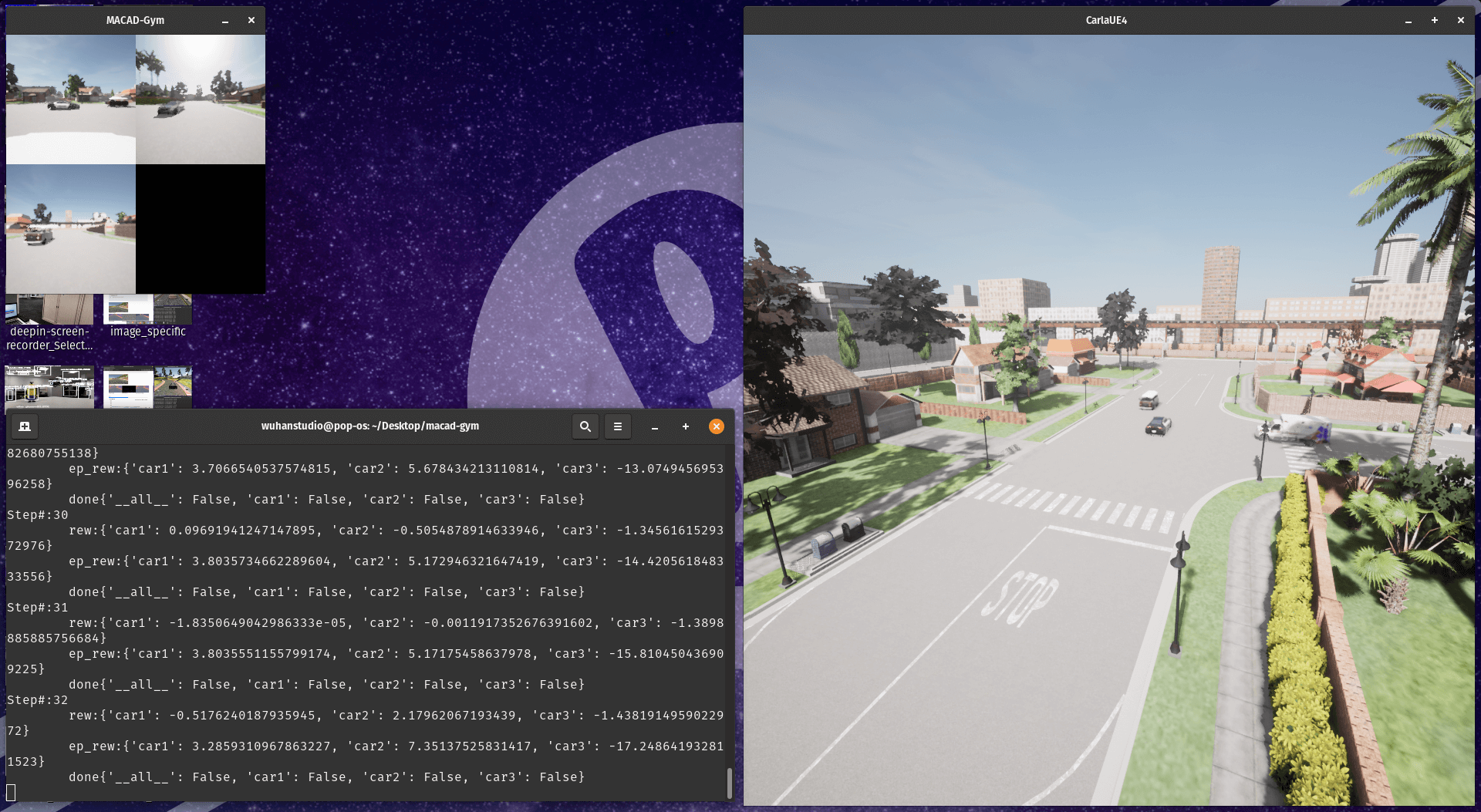

Multi-Agent Connected Autonomous Driving (MACAD)

- A Review: https://arxiv.org/abs/2203.07676

- An RL env: https://github.com/praveen-palanisamy/macad-gym

OpenAI Gym environment

- Environments:

  - Hete Ncom | Inde | PO Intrx MA | TLS 1B2C1P TWN3-v0

  - Homo Ncom | Inde | PO Intrx MA | SS 3C TWN3-v0

- Observation Space: Images (168 x 168 x 3).

- Action Space: 9 discrete actions.

RL Methods

Baseline

Discrete Action Space:

Continuous Action Space:

Advanced

Continuous Action Space:

Other Platforms

Week 2

2023/01/16 - 2023/01/22

MLSys 2023 (Author Feedback)

There will be another discussion before making the final decision (17th Feb.).

Future Plan

IROS (Mar. 2023)

- Adversarial Driving

- End-to-End Imitation Learning.

- End-to-End Reinforcement Learning.

- Adversarial Detection

IEEE Journal & Conference

- IEEE Intelligent Vehicle Symposium

- IEEE Intelligent Transportation Systems Conference.

ICCV (Jun. 2023) or BMVC (Jul. 2023)

- Man-in-the-Middle Attack (WHAT)

- Distributed Black-box Attack (BAT)

CVPR (Nov. 2023) or IJCAI (Jan. 2024)

- Adversarial Tracking (Carla)

- Adversarial Patch (Carla)

Week 3

2023/01/23 - 2023/01/29

IEEE Intelligent Vehicle Symposium

IEEE Conference

- Paper Submission Deadline: February 01, 2023.

- Page Limit: At most 8 pages.

Re-structured two manuscripts:

- Adversarial Driving

  - Added more references.

  - Highlighted the difference between online and offline attacks.

  - Added experiments on the FPS of the attack (CPU and GPU).

- Adversarial Detection

  - Added more references.

  - Added experiments on the FPS of the attack (GPU).

Week 4

2023/01/30 - 2023/02/05

IEEE Intelligent Vehicle Symposium

Notification of Acceptance March 30, 2023.

- Adversarial Driving

- Adversarial Detection

Reinforcement Learning

[●] Deep SARSA

[●] Deep Q Network

[ ] REINFORCE

[ ] A2C / A3C

[ ] DDPG

[ ] TRPO & PPO

[ ] SAC

[ ] TD3

Week 5

2023/02/06 - 2023/02/12

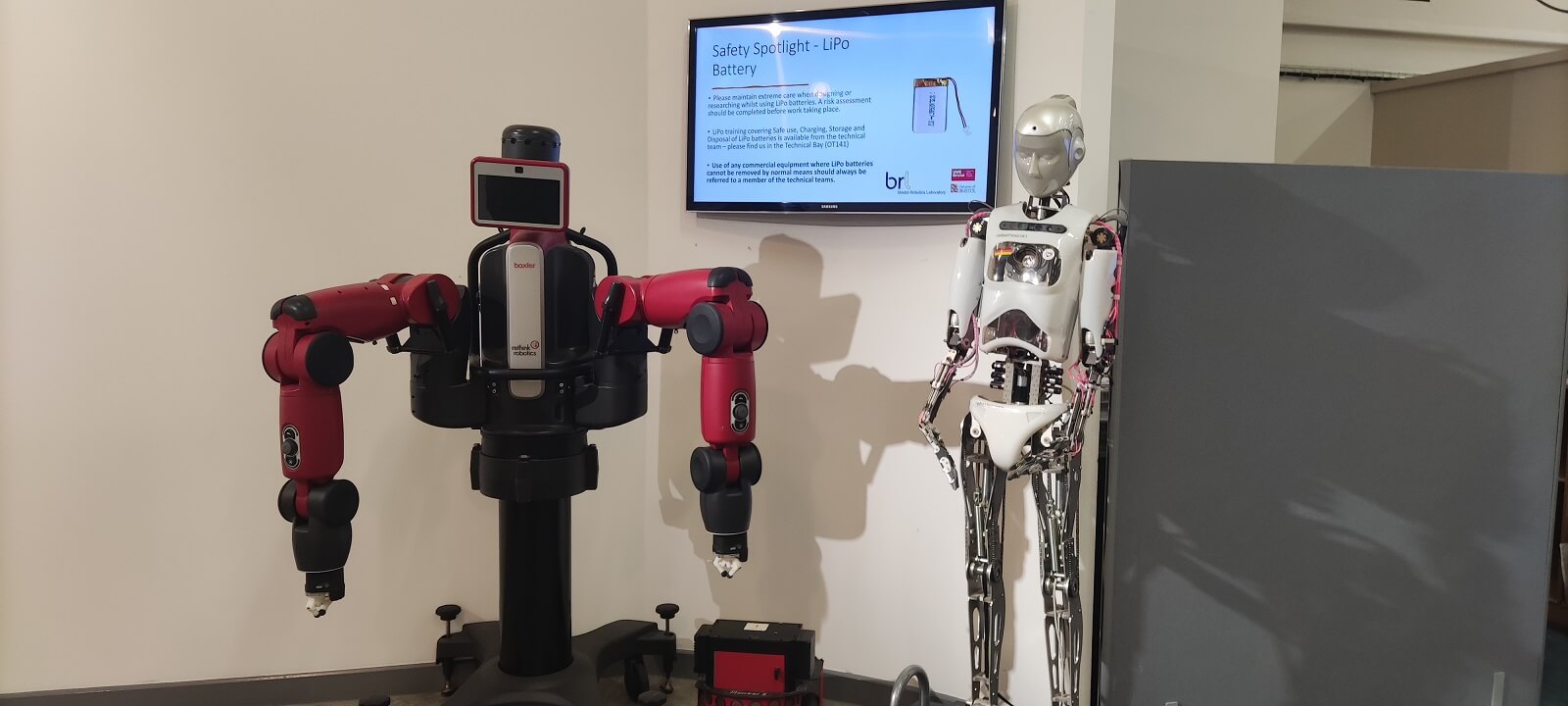

UWE Bristol

Adversarial Attacks

- Adversarial Driving

- Adversarial Detection

- [22/May] Third-Year Report

- Imitation Learning vs Reinforcement Learning

- A Man-in-the-Middle Attack (WHAT)

- Distributed Black-box Attack (BAT)

- Adversarial Tracking (Carla)

- Physical Patch (Carla)

Adversarial RL

- Carla Leaderboard

- Evaluating the Robustness of Deep Reinforcement Learning for Autonomous and Adversarial Policies in a Multi-agent Urban Driving Environment

- Adversarial Deep Reinforcement Learning for Improving the Robustness of Multi-agent Autonomous Driving Policies

Reinforcement Learning

[●] Deep SARSA

[●] Deep Q Network

[●] REINFORCE

[●] A2C / A3C

[ ] DDPG

[ ] TRPO & PPO

[ ] SAC

[ ] TD3

Week 6

2023/02/13 - 2023/02/19

OpenEuler Meetup

Recorded video will be available on YouTube and Bilibili this Thursday.

MSc Project

- Real-time End-to-End Driving

  - Wei Yu

  - Jinming Wang

- Real-time Vehicle Tracking

  - Bhavana

  - Jagadeesh

Deep Q Network

- Double DQN

- Dueling DQN

- Prioritized Experience Replay

- Noisy DQN

- N-step DQN

- Distributional DQN

Reinforcement Learning

[●] Deep SARSA

[●] Deep Q Network

[●] REINFORCE

[●] A2C / A3C

[ ] DDPG

[ ] TRPO & PPO

[ ] SAC

[ ] TD3

Week 7

2023/02/20 - 2023/02/26

Paper Submission

[IEEE IV] Decision: 30 March

- Adversarial Driving

- Adversarial Detection

[IEEE ITS] Submission: 13 May

- Adversarial Driving

- Adversarial Detection

[BMVC] Submission: 12 May

- Man-in-the-Middle Attack

- Distributed Black Box Attack

[Third-year Report] Submission: 24 May

[ICLR / CVPR] Submission: Sep 2024

- Adversarial Tracking

- Physical Patch

Research Plan

- Jan. Reinforcement Learning

- Feb. Reinforcement Learning

- Mar. Object Tracking

- Apr. Object Tracking

- May. WHAT & BAT (Resubmission)

HPC ( JADE 2 Cluster )

Hartree Centre Login

https://um.hartree.stfc.ac.uk/hartree/login.jsp

The Slurm Scheduler

https://docs.jade.ac.uk/en/latest/jade/scheduler/

Allocate a temporary node with 8 GPUs:

srun --gres=gpu:8 --pty bash

Allocate a small partition with 1 GPU:

srun --gres=gpu:1 -p small --pty bash

# 20 CPU Cores / 40 Threads

# 32 GB VRAM / 512 GB RAM

Submit and monitor jobs:

sbatch

sacct

squeue

scancel

Deep Q Network

- Double DQN

- Dueling DQN

- Prioritized Experience Replay

- Noisy DQN

- N-step DQN

- Distributional DQN

Reinforcement Learning

[●] Deep SARSA

[●] Deep Q Network

[●] REINFORCE

[●] A2C / A3C

[●] DDPG

[●] TRPO & PPO

[ ] SAC

[ ] TD3

Week 8

2023/02/27 - 2023/03/05

Research Plan

- Jan. Reinforcement Learning

- Feb. Reinforcement Learning

- Mar. Object Tracking

- Apr. Object Tracking

- May. WHAT & BAT (Resubmission)

Reinforcement Learning

Four Courses on Coursera:

Four Courses on Udemy:

Two Books:

- RL Theory: Reinforcement Learning (Sutton & Barto, 2nd Edition)

- RL Practice: Reinforcement Learning: Industrial Applications of Intelligent Agents

Deep Q Network

- Double DQN

- Dueling DQN

- Prioritized Experience Replay

- Noisy DQN

- N-step DQN

- Distributional DQN

Reinforcement Learning

[●] Deep SARSA

[●] Deep Q Network

[●] REINFORCE

[●] A2C / A3C

[●] GAE / NAF / HER

[●] DDPG

[●] TRPO & PPO

[●] SAC

[●] TD3

Week 9

2023/03/06 - 2023/03/12

Research Plan

- Jan. Reinforcement Learning

- Feb. Reinforcement Learning

- Mar. Object Tracking

- Apr. Man-in-the-Middle - WHAT (Resubmission)

- May. Distributed Black-Box - BAT (Resubmission)

Adversarial Tracking

[●] Object Tracking (Computer Vision)

[ ] Vehicle Tracking (Autonomous Driving)

[ ] Adversarial Tracking

[ ] Adversarial Patch

Week 10

2023/03/13 - 2023/03/19

Research Plan

- Jan. Reinforcement Learning

- Feb. Reinforcement Learning

- Mar. Object Tracking

- Apr. Man-in-the-Middle - WHAT (Resubmission)

- May. Distributed Black-Box - BAT (Resubmission)

Research Papers

Papers Accepted (x3):

- Interpretable Machine Learning for COVID-19, IEEE Trans on AI.

- Adversarial Driving: Attacking End-to-End Autonomous Driving System, IEEE Intelligent Vehicle.

- Adversarial Detection: Attacking Object Detection in Real Time, IEEE Intelligent Vehicle.

Papers to be submitted (x2):

- A Man-in-the-Middle Attack against Object Detection System.

- Distributed Black-box Attack against Image Classification Cloud Services.

Papers to be written (x2):

- Adversarial Tracking: Real-time Adversarial Attacks against Object Tracking.

- Adversarial Patch: Physical Patch in Carla Simulator.

Undetermined (x2):

- Reinforcement Learning.

- Interpretation and Defence.

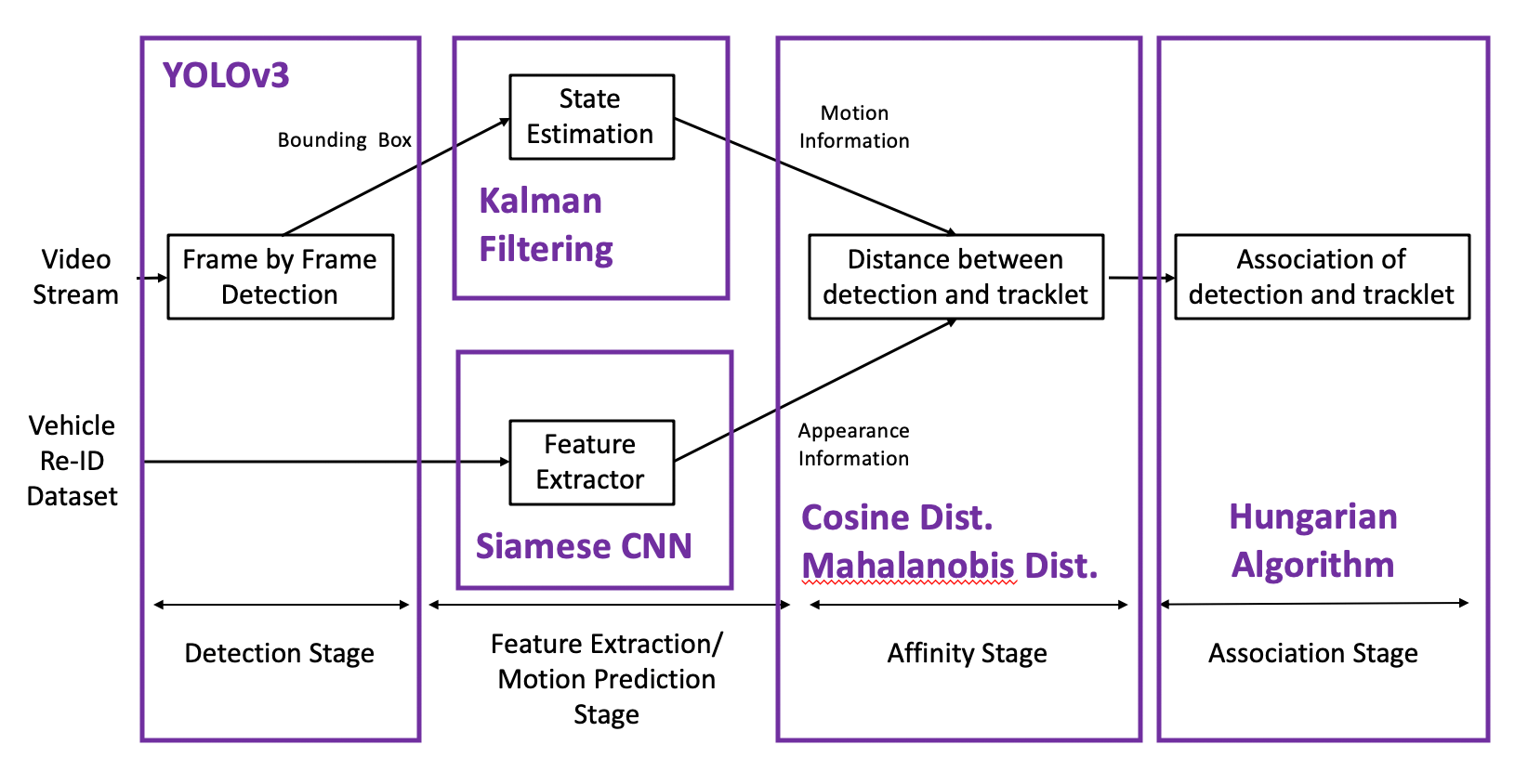

Adversarial Tracking

[●] Object Tracking (Computer Vision)

[●] Vehicle Tracking (Autonomous Driving)

[ ] Adversarial Tracking

[ ] Adversarial Patch

Week 11

2023/03/20 - 2023/03/26

Research Plan

- Jan. Reinforcement Learning

- Feb. Reinforcement Learning

- Mar. Object Tracking

- Apr. Man-in-the-Middle - WHAT (Resubmission)

- May. Distributed Black-Box - BAT (Resubmission)

Adversarial Tracking

[●] Object Tracking (Computer Vision)

[●] Vehicle Tracking (Autonomous Driving)

[●] Adversarial Tracking (Research Proposal)

[ ] Adversarial Patch

Week 12

2023/03/27 - 2023/04/02

Research Plan

- Jan. Reinforcement Learning

- Feb. Reinforcement Learning

- Mar. Object Tracking

- Apr. Man-in-the-Middle - WHAT (BMVC)

- May. Distributed Black-Box - BAT (BMVC)

Research Papers

Papers Accepted (x3):

- Interpretable Machine Learning for COVID-19, IEEE Trans on AI.

- Adversarial Driving: Attacking End-to-End Autonomous Driving System, IEEE Intelligent Vehicle.

- Adversarial Detection: Attacking Object Detection in Real Time, IEEE Intelligent Vehicle.

Papers to be submitted (x2):

- A Man-in-the-Middle Attack against Object Detection System.

- Distributed Black-box Attack against Image Classification Cloud Services.

Papers to be written (x2):

- Adversarial Tracking: Real-time Adversarial Attacks against Object Tracking.

- Adversarial Patch: Physical Patch in Carla Simulator.

Adversarial Tracking

[●] Object Tracking (Computer Vision)

[●] Vehicle Tracking (Autonomous Driving)

[●] Adversarial Tracking (Research Proposal)

[●] Adversarial Patch (Research Proposal)

Week 13

2023/04/03 - 2023/04/09

Research Plan

- Jan. Reinforcement Learning

- Feb. Reinforcement Learning

- Mar. Object Tracking

- Apr. Man-in-the-Middle - WHAT (BMVC)

- May. Distributed Black-Box - BAT (BMVC)

IEEE IV 2023

[●] The T1 System

[●] Conference Registration

[●] Final Manuscript

[●] Invitation Letter

[ ] The US Visa Application

[ ] Flight Tickets

[ ] Accommodation

BMVC 2023

[ ] Man-in-the-Middle Attack

[ ] Distributed Black-box Attack

Week 14

2023/04/10 - 2023/04/16

Research Plan

- Jan. Reinforcement Learning

- Feb. Reinforcement Learning

- Mar. Object Tracking

- Apr. Man-in-the-Middle - WHAT (BMVC)

- May. Distributed Black-Box - BAT (BMVC)

IEEE IV 2023

[●] The T1 System

[●] Conference Registration

[●] Final Manuscript

[●] Invitation Letter

[●] The US Visa Application

[ ] Flight Tickets

[●] Accommodation

BMVC 2023

[ ] Man-in-the-Middle Attack

[ ] Distributed Black-box Attack

Week 15

2023/04/17 - 2023/04/23

Research Plan

- Jan. Reinforcement Learning

- Feb. Reinforcement Learning

- Mar. Object Tracking

- Apr. Man-in-the-Middle - WHAT (BMVC)

- May. Distributed Black-Box - BAT (BMVC)

IEEE IV 2023

[●] The T1 System

[●] Conference Registration

[●] Final Manuscript

[●] Invitation Letter

[●] The US Visa Application

[ ] Flight Tickets

[●] Accommodation

BMVC 2023

[ ] Man-in-the-Middle Attack (9 pages)

[ ] Distributed Black-box Attack (9 pages)

Week 16

2023/04/24 - 2023/04/30

Research Plan

- Jan. Reinforcement Learning

- Feb. Reinforcement Learning

- Mar. Object Tracking

- Apr. Man-in-the-Middle - WHAT (BMVC)

- May. Distributed Black-Box - BAT (BMVC)

IEEE IV 2023

[●] The T1 System

[●] Conference Registration

[●] Final Manuscript

[●] Invitation Letter

[●] The US Visa Application

[ ] Flight Tickets

[●] Accommodation

BMVC 2023

[ ] Man-in-the-Middle Attack (9 pages) [Toolbox]

[ ] Distributed Black-box Attack (9 pages) [Toolbox]

Week 17

2023/05/01 - 2023/05/07

Research Plan

- Jan. Reinforcement Learning

- Feb. Reinforcement Learning

- Mar. Object Tracking

- Apr. Man-in-the-Middle - WHAT (BMVC)

- May. Distributed Black-Box - BAT (BMVC)

IEEE IV 2023

[●] The T1 System

[●] Conference Registration

[●] Final Manuscript

[●] Invitation Letter

[●] The US Visa Application

[ ] Flight Tickets

[●] Accommodation

BMVC 2023

[●] The hardware attack (Buildroot and Raspi OS)

[ ] Man-in-the-Middle Attack (9 pages) [Toolbox]

[ ] Distributed Black-box Attack (9 pages) [Toolbox]

Week 18

2023/05/08 - 2023/05/14

Research Plan

- Jan. Reinforcement Learning

- Feb. Reinforcement Learning

- Mar. Object Tracking

- Apr. Man-in-the-Middle - WHAT (BMVC)

- May. Distributed Black-Box - BAT (BMVC)

IEEE IV 2023

[●] The T1 System

[●] Conference Registration

[●] Final Manuscript

[●] Invitation Letter

[●] The US Visa Application

[ ] Flight Tickets

[●] Accommodation

[ ] Adversarial Driving (oral)

[ ] Adversarial Detection (poster)

BMVC 2023

[●] The third-year report

[●] The hardware attack (Buildroot and Raspi OS)

[●] Man-in-the-Middle Attack (9 pages) [Toolbox]

[●] Distributed Black-box Attack (9 pages) [Toolbox]

Week 19

2023/05/15 - 2023/05/21

Research Plan

- Jan. Reinforcement Learning

- Feb. Reinforcement Learning

- Mar. Object Tracking

- Apr. Man-in-the-Middle - WHAT (BMVC)

- May. Distributed Black-Box - BAT (BMVC)

IEEE IV 2023

[●] The T1 System

[●] Conference Registration

[●] Final Manuscript

[●] Invitation Letter

[●] The US Visa Application

[ ] Flight Tickets

[●] Accommodation

[●] Adversarial Driving (oral)

[●] Adversarial Detection (poster)

BMVC 2023

[●] The third-year report

[●] The hardware attack (Buildroot and Raspi OS)

[●] Man-in-the-Middle Attack (9 pages) [Toolbox]

[●] Distributed Black-box Attack (9 pages) [Toolbox]

Week 20

2023/05/22 - 2023/05/28

Research Plan

- Jan. Reinforcement Learning

- Feb. Reinforcement Learning

- Mar. Object Tracking

- Apr. Man-in-the-Middle - WHAT (BMVC)

- May. Distributed Black-Box - BAT (BMVC)

IEEE IV 2023

[●] The T1 System

[●] Conference Registration

[●] Final Manuscript

[●] Invitation Letter

[●] The US Visa Application

[x] Flight Tickets

[x] Accommodation

[●] Adversarial Driving (oral)

[●] Adversarial Detection (poster)

[●] Substitute Presenters

BMVC 2023

[●] The third-year report

[●] The hardware attack (Buildroot and Raspi OS)

[●] Man-in-the-Middle Attack (9 pages) [Toolbox]

[●] Distributed Black-box Attack (9 pages) [Toolbox]

Week 21

2023/05/29 - 2023/06/04

Talk

- IEEE IV 2023 (Talk Slides)

- BMVC 2023 (Under Review)

- RTT GDC 2023 (Talk Slides)

- PGR Conference (Talk Slides)

Toolbox

Research Plan

- Jun. Adversarial Tracking

Week 22

2023/06/05 - 2023/06/11

Research Plan

- Jun. Adversarial Tracking

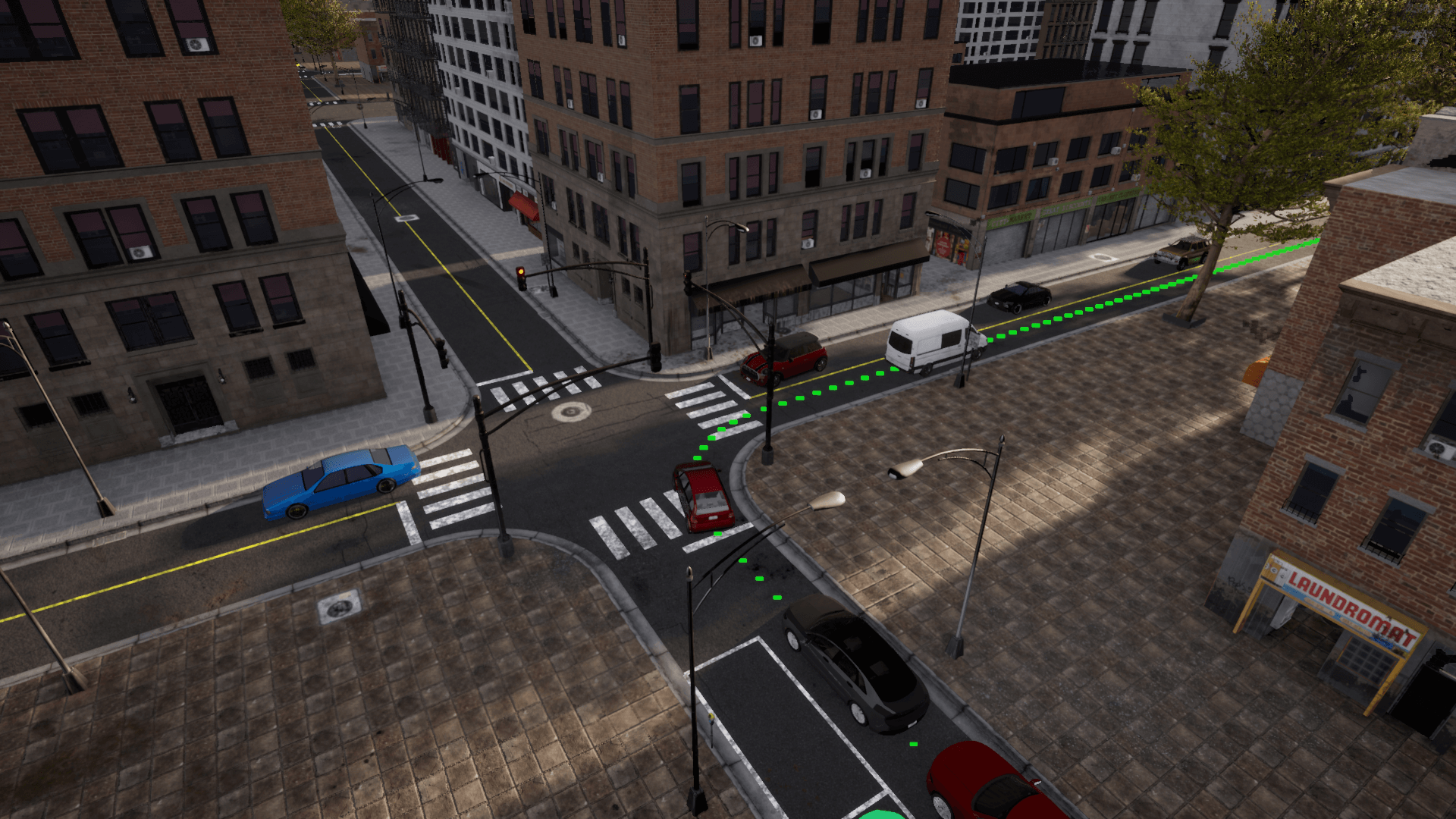

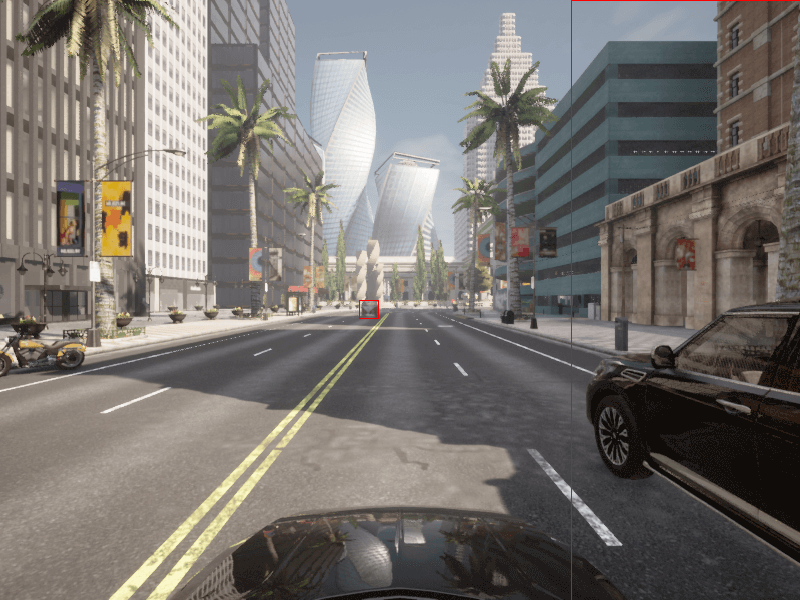

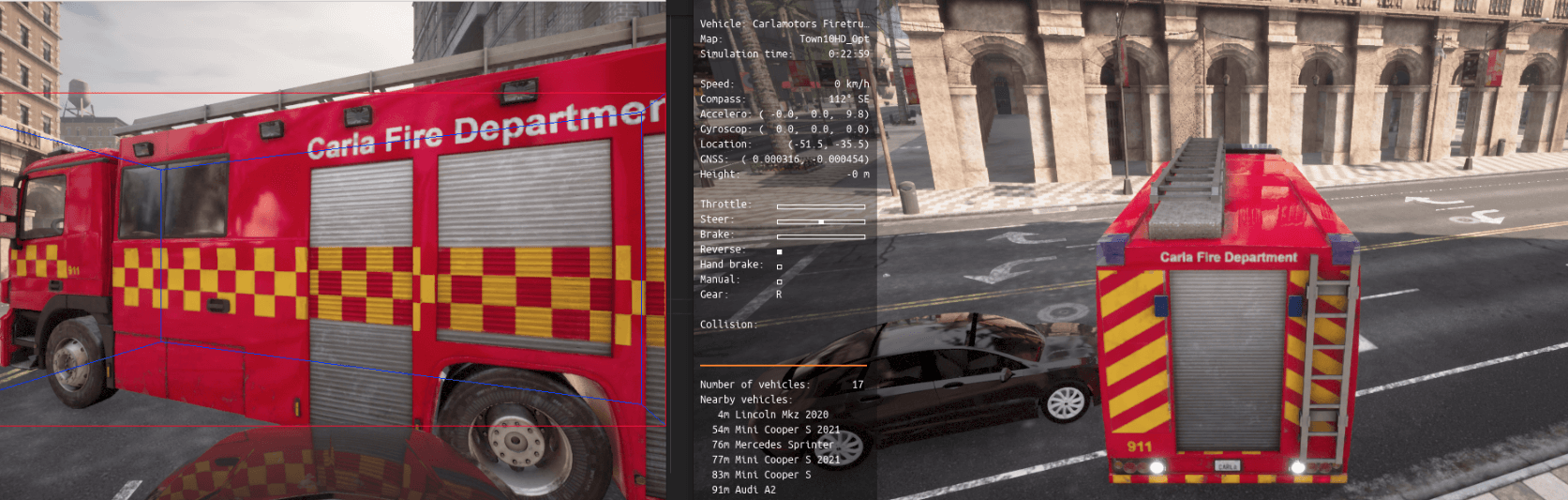

Environment

- Carla 0.9.14

- Python 3.8

- ROS Bridge

- Carla Leaderboard

- Open SCENARIO

- LAV Driving Model

Target Model

Adversarial Attacks

- Universal Adversarial Perturbation (UAP)

Next Step

- Learning from All Vehicles

- Multimodal 3D Object Detection from Simulated Pretraining

- Joint Monocular 3D Vehicle Detection and Tracking

- Monocular Quasi-Dense 3D Object Tracking

Week 23

2023/06/12 - 2023/06/18

Carla API

Models

- 3D Detection: PointPillar with PointPainting

- 2D Segmentation: ERFNet

Reading

Week 24

2023/06/19 - 2023/06/25

Presentation

- Third-Year Mini Viva (Thursday, 22nd June)

- PGR Conference (Friday, 23rd June)

Reading

Week 25

2023/06/26 - 2023/07/02

Reading

Week 26

2023/07/03 - 2023/07/09

Current Progress:

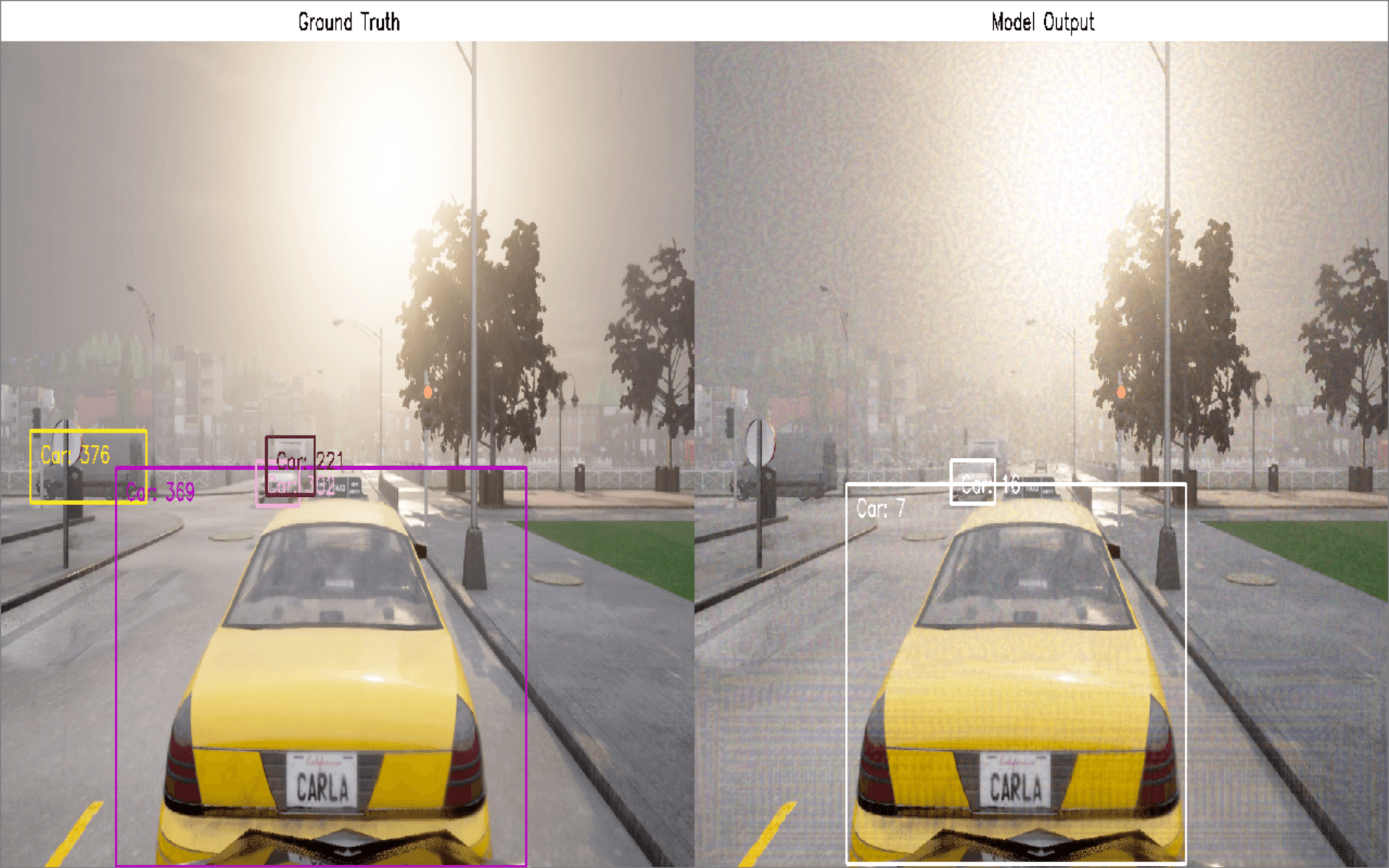

https://tracking.wuhanstudio.uk/

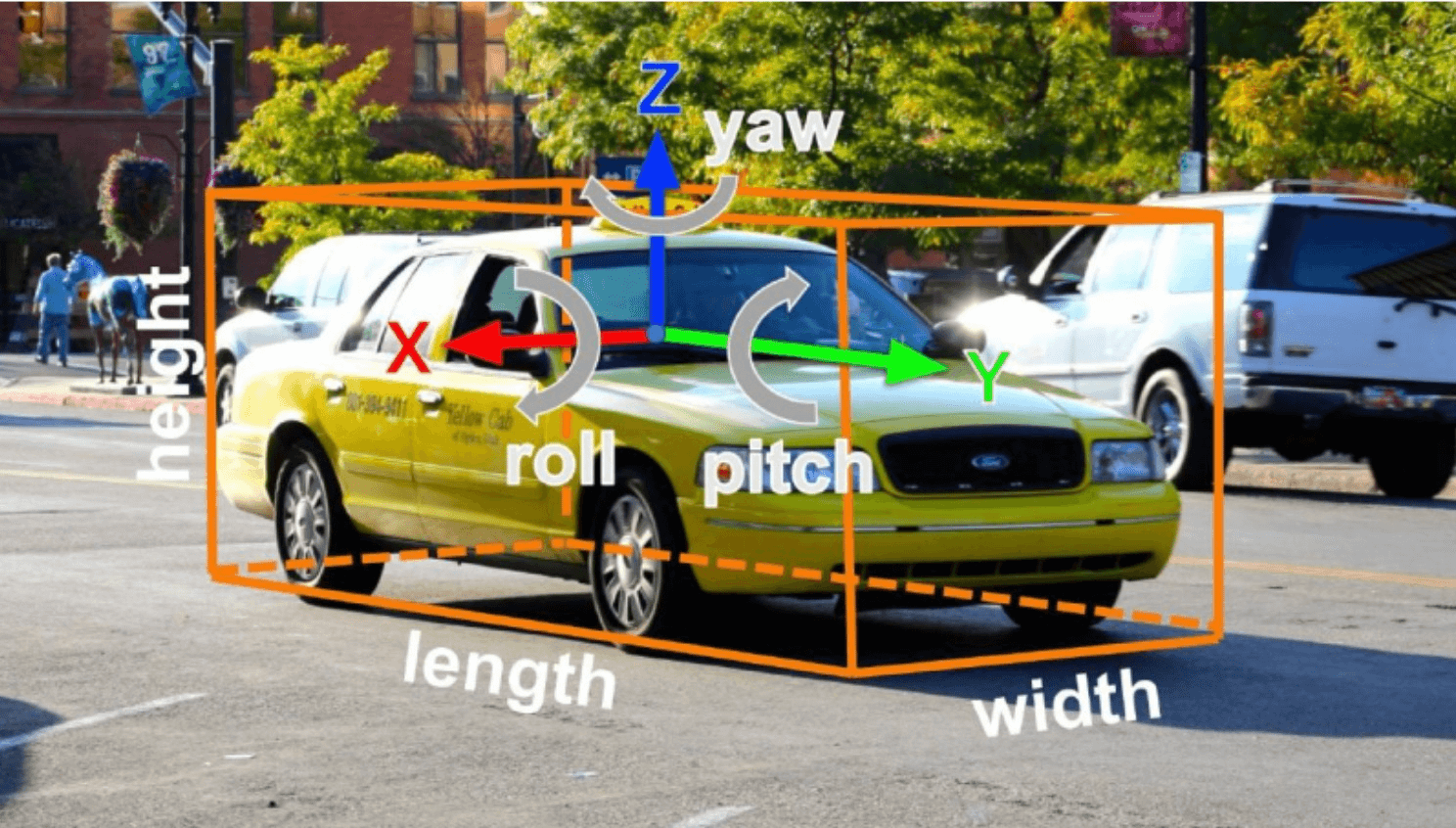

- Problem 1: How can we generate 3D Bounding Boxes? (Ground Truth)

- Problem 2: From 3D global coordinates to 2D image coordinates

- Problem 3: From 2D Bounding Boxes to 3D Bounding Boxes

- Problem 4: How can we do multi-object tracking?

- Problem 5: How can we generate UAPs?
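For Problem 2, a minimal sketch of the pinhole projection from 3D camera coordinates to 2D image coordinates. It assumes the point has already been transformed from global to camera coordinates (x right, y down, z forward) and that the intrinsics are built from the image size and horizontal field of view; the image size and field of view in the usage lines are illustrative, not the settings used in my experiments.

```python
import math


def build_intrinsics(width, height, fov_deg):
    """Pinhole intrinsics (focal length, cx, cy) from the image size
    and the horizontal field of view in degrees."""
    focal = width / (2.0 * math.tan(math.radians(fov_deg) / 2.0))
    return focal, width / 2.0, height / 2.0


def project_point(point_cam, intrinsics):
    """Project a 3D point in camera coordinates to pixel coordinates (u, v).
    Returns None for points behind the camera."""
    x, y, z = point_cam
    if z <= 0:
        return None
    focal, cx, cy = intrinsics
    return focal * x / z + cx, focal * y / z + cy


# Illustrative camera: 800 x 600 pixels, 90 degree horizontal field of view.
K = build_intrinsics(800, 600, 90.0)
print(project_point((0.0, 0.0, 10.0), K))   # a point on the optical axis
print(project_point((2.0, -1.0, 10.0), K))  # right of and above the axis
```

Projecting the eight corners of a 3D bounding box this way and taking the min/max of the resulting pixels gives a 2D bounding box.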

Week 27

2023/07/10 - 2023/07/16

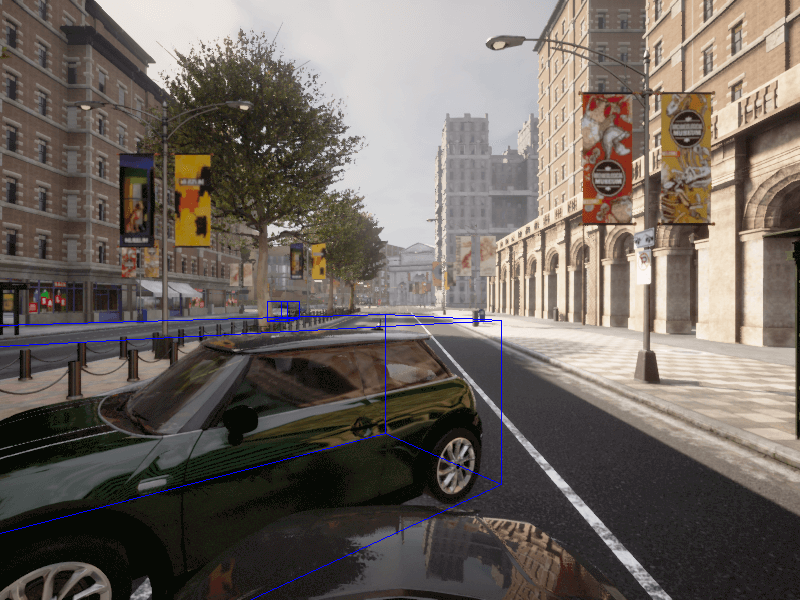

3D Object Detection in CARLA

- Pretrained on KITTI

https://github.com/skhadem/3D-BoundingBox


Week 28

2023/07/17 - 2023/07/23

3D Object Detection in CARLA

- Fixed 2D bounding boxes

Week 29

2023/07/24 - 2023/07/30

- BMVC (21st Aug. 2023)

- IEEE Transactions on AI

- AAAI Workshop (9th Dec. 2023)

- IEEE Symposium on Security and Privacy (12th Dec. 2023)

- ICLR Tiny Paper (Feb. 2024)

- ACM Workshop on AI And Security (Jul. 2024)

Week 30

2023/07/31 - 2023/08/06

2D Detection:

- YOLO

- SSD

- Faster RCNN

3D Detection:

- Orientation

- 3D Center

- Depth

Object Tracking:

- SORT

- DeepSORT

- StrongSORT

Week 31

2023/08/07 - 2023/08/13

SORT:

- Kalman Filter

- Jonker–Volgenant Algorithm

- Hungarian Algorithm
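The association step of SORT matches predicted track boxes to new detections by IoU. In the sketch below, a brute-force search over permutations stands in for the Hungarian / Jonker–Volgenant solvers, which is only practical for a handful of boxes; the threshold of 0.3 is an illustrative default, and the Kalman filter prediction is omitted.

```python
from itertools import permutations


def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def match(tracks, detections, iou_threshold=0.3):
    """Find the assignment with the highest total IoU by brute force, then
    drop pairs below the threshold. Returns (track_idx, detection_idx) pairs."""
    if len(tracks) > len(detections):
        raise ValueError("this sketch assumes len(tracks) <= len(detections)")
    best_score, best_perm = -1.0, ()
    for perm in permutations(range(len(detections)), len(tracks)):
        score = sum(iou(tracks[t], detections[d]) for t, d in enumerate(perm))
        if score > best_score:
            best_score, best_perm = score, perm
    return [(t, d) for t, d in enumerate(best_perm)
            if iou(tracks[t], detections[d]) >= iou_threshold]


tracks = [(0, 0, 10, 10), (20, 20, 30, 30)]
detections = [(21, 21, 31, 31), (1, 1, 11, 11)]
print(match(tracks, detections))
```

Unmatched detections would start new tracks and unmatched tracks would age out; both are left out of this sketch.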

Week 32

2023/08/14 - 2023/08/20

Deep SORT:

- Feature Pyramid Network

- Siamese Neural Network

- Kalman Filter

- Jonker–Volgenant Algorithm

- Hungarian Algorithm (Matching Cascade)

Week 33

2023/08/21 - 2023/08/27

Strong SORT:

- YOLOX

- Feature Pyramid Network

- Siamese Neural Network

- Exponential Moving Average (EMA)

- Enhanced Correlation Coefficient Maximization (ECC)

- Motion Cost

- Global Linear Assignment

- NSA Kalman Filter

- Jonker–Volgenant Algorithm

- Hungarian Algorithm

Strong SORT++:

- Appearance-Free Link Model (AF Link)

- Gaussian-smoothed Interpolation Algorithm (GSI)

Week 34

2023/08/28 - 2023/09/03

Unsolved Problems:

- Pedestrian Detection / Vehicle Detection

- CARLA Dataset / KITTI Dataset

- 3D Detection

Solutions:

- Both Person and Vehicle ReID: Model Zoo.

- Bag of Tricks (BoT), Bag of Freebies (BoF), Bag of Specials (BoS)

- MOT Dataset / CARLA Demo

Three Deep Learning Models:

- 2D Object Detection (MS COCO)

- Pedestrian / Vehicle ReID (ImageNet)

- 3D Bounding Boxes [Position, Dimension, Orientation] (KITTI)

Source Code:

https://github.com/wuhanstudio/2d-carla-tracking

The Alan Turing Institute

2023/09/04 - 2023/09/15

- Defence Data Research Centre (DDRC)

- Defence Science and Technology Laboratory (Dstl)

- https://www.turing.ac.uk/events/data-study-group-september-2023

International Welcome Team

2023/09/16 - 2023/09/30

Week 2

2023/10/02 - 2023/10/08

October:

- Man-in-the-Middle: Hardware UAP

- Distributed Black-box: Initial UAP

- Adversarial Tracking: Digital UAP

- Adversarial Patch: Physical UAP

Conferences:

- Dec: Practical Deep Learning in the Wild Workshop (AAAI Canada)

- Feb: 7th Deep Learning Security and Privacy Workshop (IEEE S&P U.S.)

- Feb: ICLR Workshop (Vienna)

- Jul: 17th ACM Workshop on AI And Security (ACM CCS).

Week 3

2023/10/09 - 2023/10/15

- Introduction to ISCA and High Performance Computing (2 sessions 1pm – 4pm: 1st and 8th November, Training Room 4, Old Library)

- Exeter Open Research Winner Award Presentation (22nd November, 11:30am – 12:30pm, Laver LT3)

-

- One-shot Image Recognition

- Face Recognition

- Signature Verification

- Learns a similarity function

- Triplet Loss

- Contrastive Loss
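Both losses operate on distances between embeddings. The sketch below uses one common formulation of each (Euclidean distance, margin of 1.0); other variants use squared distances or a factor of 1/2, so the exact form is an assumption.

```python
import math


def euclidean(a, b):
    """Euclidean distance between two embedding vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def contrastive_loss(a, b, same, margin=1.0):
    """Pull matching pairs together; push non-matching pairs at least
    `margin` apart."""
    d = euclidean(a, b)
    if same:
        return d ** 2
    return max(0.0, margin - d) ** 2


def triplet_loss(anchor, positive, negative, margin=1.0):
    """Require the positive to be closer to the anchor than the negative
    by at least `margin`."""
    return max(0.0, euclidean(anchor, positive) - euclidean(anchor, negative) + margin)


# Illustrative 2D embeddings.
anchor, positive = (0.0, 0.0), (0.0, 0.5)
print(triplet_loss(anchor, positive, negative=(3.0, 4.0)))  # far negative
print(triplet_loss(anchor, positive, negative=(0.0, 1.0)))  # hard negative
```

In both cases the network learns a similarity function rather than class labels, which is what makes one-shot recognition and re-identification possible.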

| | KITTI | CARLA |
|---|---|---|
| Dataset (Ground Truth) | x | - |
| Tracking (Model Output) | x | |
| Evaluation | - | - |
| Attack | | |

Week 4

2023/10/16 - 2023/10/22

Higher Order Tracking Accuracy (HOTA)

| | KITTI | CARLA |
|---|---|---|
| Dataset (Ground Truth) | x | - |
| Tracking (Model Output) | x | x |
| Evaluation | x | - |
| Attack | | |

| HOTA | KITTI | CARLA |
|---|---|---|
| Ground Truth | 100.00 | |
| Ground Truth (Strong SORT) | 93.72 | |
| Ground Truth (Deep SORT) | 85.55 | |
| Ground Truth (SORT) | 84.62 | |
| CWIT | 66.31 | |
| YOLO (Strong SORT) | 55.17 | |
| YOLO (Deep SORT) | 51.99 | |
| YOLO (SORT) | 50.63 | |
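For reference, HOTA at a single localisation threshold is the geometric mean of detection accuracy (DetA) and association accuracy (AssA), and the final score averages over a range of thresholds. A minimal sketch with made-up accuracy values (not results from the table):

```python
import math


def hota_alpha(det_a: float, ass_a: float) -> float:
    """HOTA at one localisation threshold: geometric mean of detection
    accuracy and association accuracy."""
    return math.sqrt(det_a * ass_a)


def hota(det_scores, ass_scores) -> float:
    """Average HOTA_alpha over the localisation thresholds."""
    values = [hota_alpha(d, a) for d, a in zip(det_scores, ass_scores)]
    return sum(values) / len(values)


# Illustrative values only: accuracies at three thresholds.
print(hota([0.64, 0.60, 0.50], [0.36, 0.30, 0.20]))
```

Because of the geometric mean, a tracker cannot score well by being strong on detection alone or association alone.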

Siamese Tracking (SOT)

Physical Patch

Week 5

2023/10/23 - 2023/10/29

Higher Order Tracking Accuracy (HOTA)

| | KITTI | CARLA |
|---|---|---|
| Dataset (Ground Truth) | x | x |
| Tracking (Model Output) | x | x |
| Evaluation | x | x |
| Attack | | |

| HOTA | KITTI | CARLA |

|---|---|---|

| Ground Truth | 100.00 | 100.00 |

| Ground Truth (Strong SORT) | 93.72 | 89.88 |

| Ground Truth (Deep SORT) | 85.55 | 81.79 |

| Ground Truth (SORT) | 84.62 | 82.82 |

| CWIT | 66.31 | - |

| YOLO (Strong SORT) | 55.17 | 56.16 |

| YOLO (Deep SORT) | 51.99 | 53.16 |

| YOLO (SORT) | 50.63 | 53.97 |

Week 6

2023/10/30 - 2023/11/05

Cross-linking Surgery

Week 7

2023/11/06 - 2023/11/12

Higher Order Tracking Accuracy (HOTA)

| | KITTI | CARLA |
|---|---|---|
| Dataset (Ground Truth) | x | x |
| Tracking (Model Output) | x | x |
| Evaluation | x | x |
| Attack | | |

| HOTA | KITTI | CARLA |

|---|---|---|

| Ground Truth | 100.00 | 100.00 |

| Ground Truth (OC SORT) | 93.84 | 92.93 |

| Ground Truth (Strong SORT) | 93.72 | 91.19 |

| Ground Truth (Deep SORT) | 85.55 | 82.49 |

| Ground Truth (SORT) | 84.62 | 82.11 |

| 3D Lidar | ||

| PermaTrack (OC-SORT) | 76.50 | - |

| Stereo Camera | ||

| CWIT | 66.31 | - |

| Single Camera | ||

| YOLOX (OC SORT) | 53.78 | 60.40 |

| YOLOX (Strong SORT) | 54.30 | 59.60 |

| YOLOX (Deep SORT) | 52.82 | 56.97 |

| YOLOX (SORT) | 51.32 | 57.01 |

| YOLO (OC SORT) | 53.38 | 57.99 |

| YOLO (Strong SORT) | 55.17 | 59.49 |

| YOLO (Deep SORT) | 51.99 | 56.65 |

| YOLO (SORT) | 50.63 | 56.31 |

Week 8

2023/11/13 - 2023/11/19


Research Plan

- Jan. Reinforcement Learning

- Feb. Reinforcement Learning

- Mar. Research Proposal

- Apr. Man-in-the-Middle - WHAT (IEEE IV & BMVC)

- May. Distributed Black-Box - BAT (IEEE IV & BMVC)

- Jun. Carla Simulator

- Jul. 3D Detection

- Aug. Object Tracking

- Sep. Data Study Group & International Welcome Team

- Oct. Review & Evaluation

- Nov. Universal Adversarial Perturbations (IEEE TAI)

- Dec. UAPs

Week 9

2023/11/20 - 2023/11/26

- Yolov3 + Leaky ReLU + TensorFlow

- Yolov4 + mish + TensorFlow

- YoloX + SiLU + Pytorch

- Bilinear Interpolation


Week 10

2023/11/27 - 2023/12/03

- ISCA HPC

- YOLO-X

Week 11

2023/12/04 - 2023/12/10

- Computer Graphics - Marking

Week 12

2023/12/11 - 2023/12/17

1. White-box Adversarial Toolbox

- Digital Perturbation

  - Adversarial Driving

  - Adversarial Detection

  - [•] Man-in-the-Middle Attack

  - [ ] Adversarial Tracking

- Physical Perturbation

  - [ ] Adversarial Patch

2. Black-box Adversarial Toolbox

- [•] Distributed Black-box Attack (AISec - ACM CCS)

3. Model Interpretation

- Interpretable Machine Learning for COVID-19

- Clinico-Radiological Data Assessment using Machine Learning

4. PhD Thesis

1st March, 2024 --> 24th May, 2024

Travel

2023/12/18 - 2023/12/24

- Computer Graphics

- Differentiable Physics

- Differentiable Rendering

2023/12/25 - 2023/12/31

- Exeter --> London --> Hong Kong --> Taiwan

2024/01/01 - 2024/01/07

- Taiwan --> Hong Kong --> Hangzhou

- Hangzhou --> Wuhan --> London --> Exeter

Week 1

2024/01/08 - 2024/01/14

Recovering from flu.

Week 2

2024/01/15 - 2024/01/21

- IEEE TAI Major Revision (Response Letter)

- Lab: Computers and Internet

- Workshop: Computer Vision

Week 3

2024/01/22 - 2024/01/28

- IEEE TAI Major Revision (Experimental Results)

Week 4

2024/01/29 - 2024/02/04

- IEEE TAI Major Revision (Submission)

Week 5

2024/02/05 - 2024/02/11

- IEEE Access Major Revision (Response Letter)

Week 6

2024/02/12 - 2024/02/18

- IEEE Access Major Revision (SOTA Methods)

Week 7

2024/02/19 - 2024/02/25

- IEEE Access Major Revision (Discussion)

Week 8

2024/02/26 - 2024/03/03

- ECCV 2024 (Submission)

Week 9

2024/03/04 - 2024/03/10

- Introduction

- Chapter 2: Adversarial Driving (Published x1)

- Chapter 3: Adversarial Detection (Published x1) & Man-in-the-Middle Attack (Minor Revision x1)

- Chapter 4: Adversarial Tracking (Proposal)

- Chapter 5: Distributed Black-box Attack (Manuscript x1)

- Chapter 6: Interpretation and Defence (Published x2)

- Summary

- Future Research: Adversarial Patch (Proposal)

Week 13

2024/04/01 - 2024/04/07

[●] Thesis Template

[●] University Logo and Header

[●] GitHub Repo: Fix compilation errors

[●] Restructure figures and sections

[●] Abstract and Introduction

[●] Published papers and manuscripts

[●] Summary and Future Research

[●] Acknowledgements

[●] Revision

Week 18

2024/05/06 - 2024/05/12

[●] Adversarial Tracking (Intro)

[●] Adversarial Tracking (Experiments)

[●] Revision

Week 22

2024/06/03 - 2024/06/09

- Scheduling Viva Examination.

- Start looking for a Postdoc.

- Workshop: Introduction to MATLAB.

- Workshop: Parallel Computing.

- Talk Accepted: WASM on Embedded Systems, Kubecon HK.

Week 26

2024/07/01 - 2024/07/07

- Paper Accepted: A Human-in-the-Middle Attack against Object Detection Systems, IEEE Trans on AI.

- Ph.D. Defence: July 12th, 2024

Week 30

2024/08/01

University of Southampton - Physics and Astronomy